Task Overview

CHiME-6 targets the problem of distant microphone conversational speech recognition in everyday home environments. Speech material has been collected from twenty real dinner parties that have taken place in real homes. The parties have been made using multiple 4-channel microphone arrays and have been fully transcribed.

The challenge features:

- simultaneous recordings from multiple microphone arrays;

- real conversation, i.e. talkers speaking in a relaxed and unscripted fashion;

- a range of room acoustics from 20 different homes each with two or three separate recording areas;

- real domestic noise backgrounds, e.g., kitchen appliances, air conditioning, movement, etc.

Fully transcribed utterances are provided in continuous audio with ground truth speaker labels and start/end time annotations for segmentation.

The scenario

The dataset is made up of the recording of twenty separate dinner parties that are taking place in real homes. Each dinner party has four participants - two acting as hosts and two as guests. The party members are all friends who know each other well and who are instructed to behave naturally.

Efforts have been taken to make the parties as natural as possible. The only constraints are that each party should last a minimum of 2 hours and should be composed of three phases, each corresponding to a different location:

- kitchen - preparing the meal in the kitchen area;

- dining - eating the meal in the dining area;

- living - a post-dinner period in a separate living room area.

Participants have been allowed to move naturally from one location to another but with the instruction that each phase should last at least 30 minutes.

Participants are free to converse on any topics of their choosing – there is no artificial scenario-ization. Some personally identifying material has been redacted post-recording as part of the consent process. Background television and commercial music has been disallowed in order to avoid capturing copyrighted content.

The recording set up

Each party has been recorded with a set of six Microsoft Kinect devices. The devices have been strategically placed such that there are always at least two capturing the activity in each location.

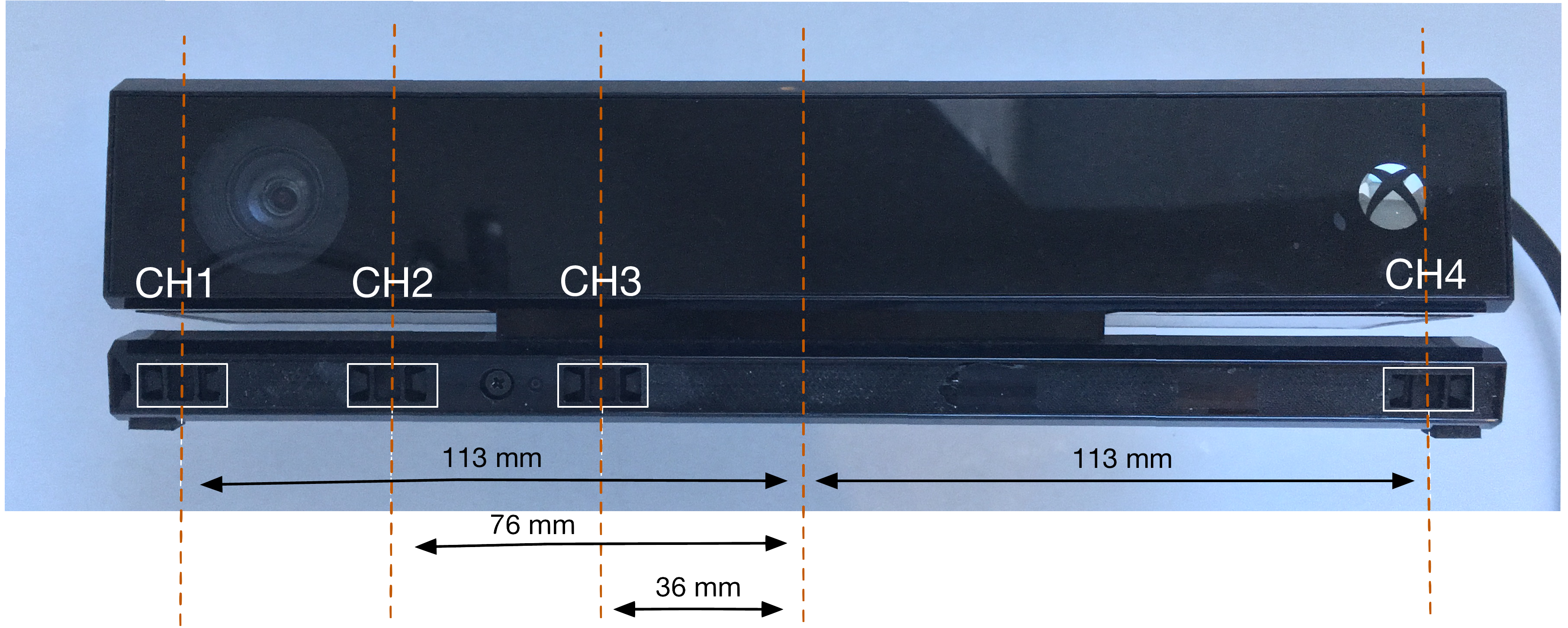

Each Kinect device has a linear array of 4 sample-synchronised microphones and a camera. The microphone array geometry is illustrated in the figure below.

The raw microphone signals and video have been recorded. Each Kinect is recorded onto a separate laptop computer.

|

|

|

|

In addition to the Kinects, to facilitate transcription, each participant is wearing a set of Soundman OKM II Classic Studio binaural microphones. The audio from these is recorded via a Soundman A3 adapter onto Tascam DR-05 stereo recorders being worn by the participants.

The recordings have been divided into training, development test and evaluation test sets. Each set features non-overlapping homes and speakers.

Tracks

For the first time, the challenge moves beyond automatic speech recognition (ASR) and also considers the task of diarization, i.e., estimating the start and end times and the speaker label of each utterance.

The challenge features two tracks:

- ASR only: recognise a given evaluation utterance given ground truth diarization information,

- diarization+ASR: perform both diarization and ASR

Both tracks are multi-array, i.e., all microphones of all arrays can be used.

Track 1 is a rerun of CHiME-5 and Track 2 is similar to the ``Diarization from multichannel audio using system SAD’’ track of DIHARD II, with the following key differences:

- an accurate array synchronization script is provided,

- the impact of diarization error on speech recognition error will be measured,

- upgraded, state-of-the-art baselines are provided for diarization, enhancement, and recognition.

For each track, we will produce two separate ASR ranking categories:

- A. systems based on conventional acoustic modeling and official language modeling: the outputs of the acoustic model must remain frame-level tied phonetic (senone) targets and the lexicon and language model must not be changed compared to the conventional ASR baseline,

- B. all other systems, including systems based on the end-to-end ASR baseline or systems whose lexicon and/or language model have been modified.

In other words, ranking A focuses on acoustic robustness only, while ranking B addresses all aspects of the task.